In digital marketing, search engine optimisation (SEO) is crucial in driving organic website traffic. A well-executed SEO audit is the foundation for improving your website’s visibility and rankings on search engine result pages (SERPs).

While there are various tools available for conducting an SEO audit, one tool that stands out is the Screaming Frog SEO Spider. With its powerful crawling capabilities and insightful data, Screaming Frog has become a favourite of mine and of plenty of other SEO professionals.

In this article, we will explore how to perform an effective SEO audit using Screaming Frog and delve into key areas within the tool that can help uncover optimisation opportunities and boost your website’s performance. Whether you’re an experienced SEO practitioner or a beginner looking to enhance your website’s visibility, this guide will equip you with the knowledge and tools you need to conduct a comprehensive SEO audit with Screaming Frog.

This article is a guest contribution from Nikki Halliwell, a freelance Technical SEO Consultant and Technical SEO Lead at Journey Further.

How to Set Up Your Crawl Configuration in Screaming Frog

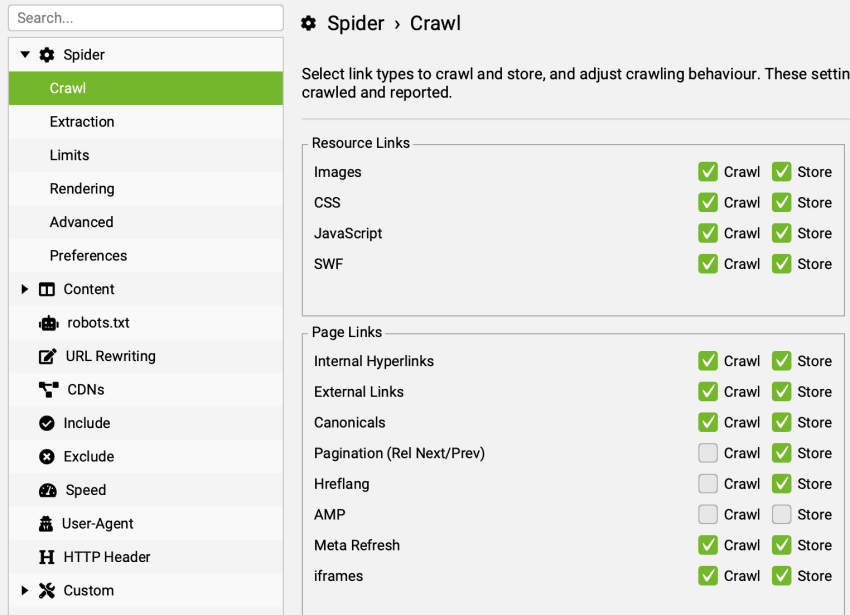

When starting a crawl, the first step is to open up the configuration settings and ensure the crawl is set up correctly and will capture the data we want. We can do this under Configuration > Crawl Config, which will bring up the Spider Configuration menu. The first section you will see is the Crawl tab.

In some cases, the settings in the Resource Links and Page Links section don’t need to change, but it is important to ensure they’re correct for the website you want to crawl. For example, if the site has an international set-up, you should ensure the crawl is set up to crawl and store hreflang data.

The same applies to the Extraction tab; make sure all of the Page Details and URL Details you want to capture are selected. Further down, you will see the Structured Data section, and as before, ensure the necessary types of schema are chosen for your website; this includes JSON-LD and schema.org validation, etc.

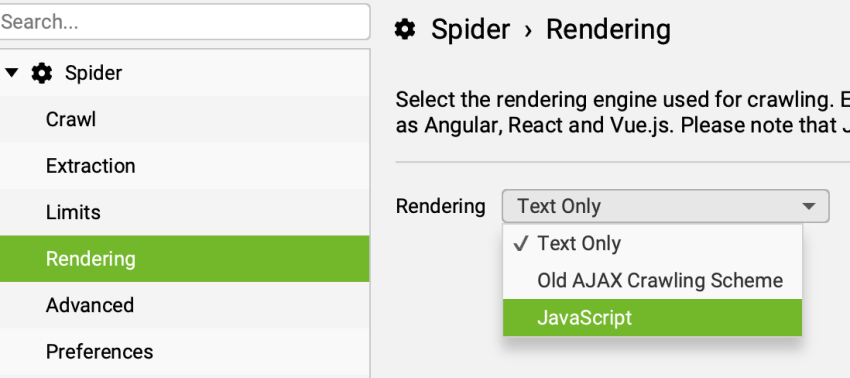

If the website you’re crawling uses JavaScript, you can turn on JS crawling under the Rendering tab. We don’t need JavaScript for this particular website, so I will leave this setting as Text Only.

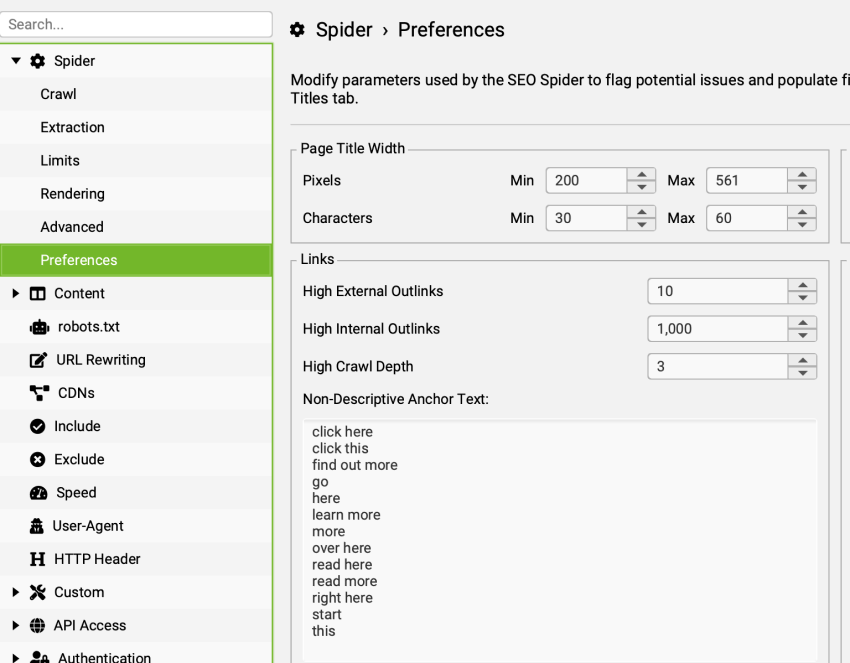

The Preferences tab is where you can adjust any settings relating to the page title, meta description length etc. The SEO Spider automatically includes examples of Non-Descriptive Anchor Text that it will search for, such as “click here.” However, if there are additional examples you would like to include, you can do that in this tab too.

Click OK once you’re happy with the settings you’ve selected.

If you expect to use the same settings for most of your crawls, you can save them as your default crawl configuration under File > Configuration > Save Current Configuration as Default.

Remember that the settings selected here may change when crawling and auditing a staging website.

Specifying the XML Sitemap

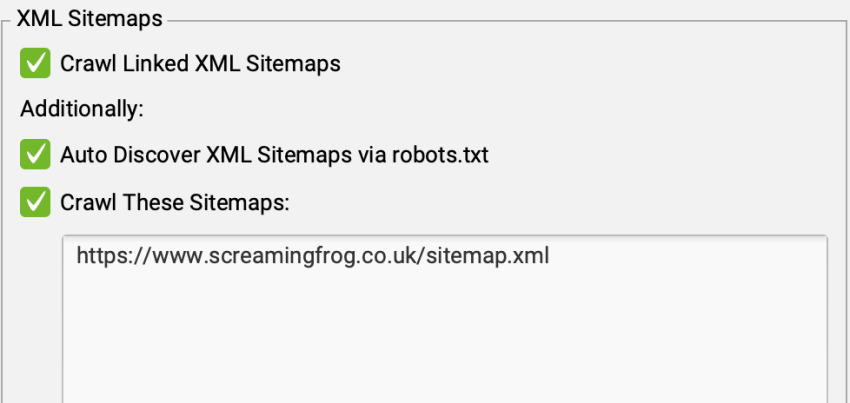

An important and useful feature is the ability to crawl the XML sitemap, which you will find in the first Crawl tab.

The XML sitemap will change with each website, and while the spider should pick up the XML sitemap as long as it is included in the robots.txt, I prefer to specify the sitemap URL/s to ensure they’re captured correctly.
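If you want to check which sitemaps a site declares before pasting them into the configuration, a few lines of Python can read them out of the robots.txt file. This is only a sketch: the function name and the example.com URLs are my own placeholders, and it works on robots.txt text you have already downloaded rather than fetching anything itself.

```python
def sitemap_urls_from_robots(robots_txt: str) -> list[str]:
    """Return the URLs declared in 'Sitemap:' lines of a robots.txt body."""
    urls = []
    for line in robots_txt.splitlines():
        # Drop trailing comments, then split on the first colon only,
        # because the sitemap URL itself contains colons.
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


# Placeholder robots.txt content for illustration.
example = """\
User-agent: *
Disallow: /checkout/
Sitemap: https://www.example.com/sitemap_index.xml
sitemap: https://www.example.com/blog-sitemap.xml
"""

print(sitemap_urls_from_robots(example))
```

The directive name is matched case-insensitively, so both lines in the example are picked up. An empty result is a hint that the sitemap URL needs to be specified by hand.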

Exploring Content Settings and Starting Your Crawl

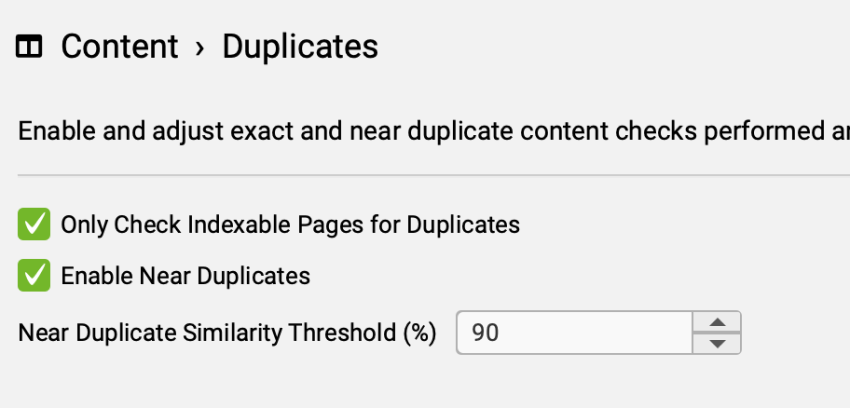

Before I start my crawl, I enable additional settings that allow Screaming Frog to detect duplicate content. We can access this under the Configuration menu > Content > Duplicates.

This will open up the Duplicates menu, where you should see the following options. If it is not already checked, I tick the Enable Near Duplicates option, which lets Screaming Frog look for near-duplicate content: pages that are at least 90% similar to other content on the site. The Similarity Threshold can be changed as needed.
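To get a feel for what a similarity threshold means in practice, here is a simplified sketch that compares two pieces of text by the overlap of their three-word sequences (a Jaccard score). This is not the SEO Spider’s own algorithm, and the function names and the shingle size are my assumptions; it only illustrates how a score is compared against a threshold such as 90%.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word sequences of the given size."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)


def is_near_duplicate(a: str, b: str, threshold: float = 0.9) -> bool:
    """True when the similarity score meets the chosen threshold."""
    return similarity(a, b) >= threshold


page_a = "the quick brown fox jumps"
page_b = "the quick brown fox leaps"
print(similarity(page_a, page_b))         # 0.5
print(is_near_duplicate(page_a, page_b))  # False
```

Lowering the threshold catches more loosely related pages at the cost of more false positives, which is the same trade-off you make when adjusting the setting in the tool.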

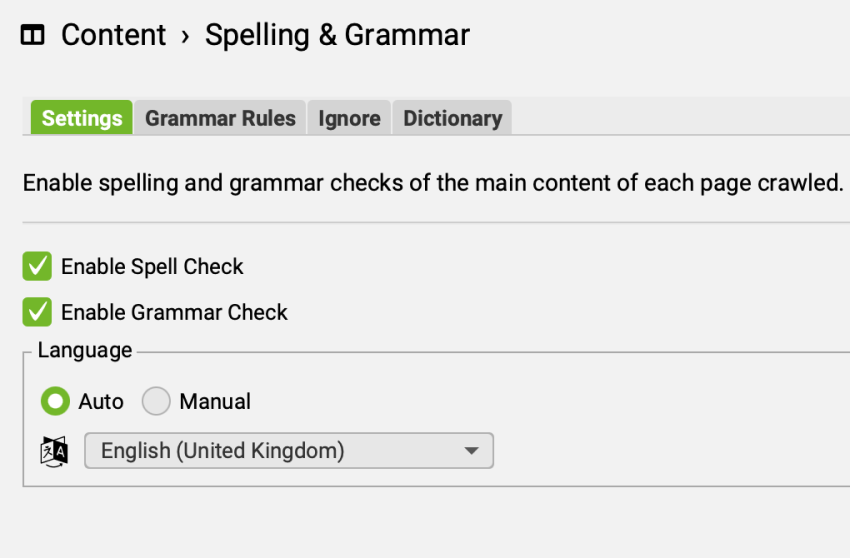

Finally, I look at the Spelling and Grammar settings under Configuration > Content > Spelling & Grammar. Here, you can enable the spelling and grammar checks and ensure the language is set to match the language used on the site. The website in question uses UK English, so I will set the language accordingly. If it had a US focus, I would set the language to English (United States of America) to account for the differences in some US spellings. Click OK once done.

As before, if you know these settings will be used on most of your crawls, these can be saved in your default crawl configuration.

Once done, you can press Start to begin your crawl.

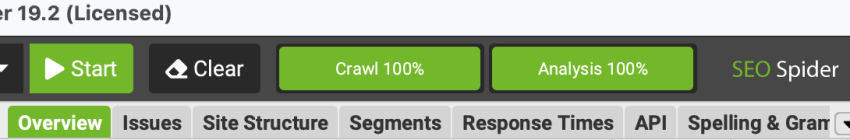

Maximising Insights with Crawl Analysis

Once the crawl has finished, it is recommended to run a crawl analysis by navigating to Crawl Analysis > Start. This analysis allows additional data to be populated, such as the link score metric for internal URLs, duplicate content, crawl discrepancies such as non-indexable URLs in the XML sitemap and more.

Running a crawl analysis is not mandatory but allows you to access otherwise unavailable data, so I recommend this step.

Screaming Frog SEO Audit: A Dive into Key Areas

By crawling your website, Screaming Frog unveils a treasure trove of data that can help identify areas for improvement and optimisation. This section will explore some key areas within Screaming Frog SEO Spider that you can use as part of a website audit. From analysing website structure to evaluating on-page elements, the crawler equips you with the necessary tools to uncover SEO opportunities and fine-tune your website for search engine success.

Page Titles, Meta Descriptions and H1s: Enhancing On-Page SEO

Page titles, meta descriptions, and H1 tags are crucial in optimising your website’s on-page SEO. With Screaming Frog, you can easily analyse these elements to ensure they are effectively optimised.

There are separate tabs for Page Titles, Meta Description and H1 that each provide a comprehensive overview of these areas. You can identify potential issues such as missing, duplicate, or overly long titles and descriptions here.
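If you export this data and want to run the same checks yourself, the logic looks roughly like the sketch below. The 60-character limit, the function name and the sample pages are assumptions for illustration; use whatever limits you set in the Preferences tab.

```python
from collections import Counter

MAX_TITLE_CHARS = 60  # assumed limit; adjust to match your own preferences


def audit_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by page title problem: missing, duplicate or too long."""
    # Count titles case-insensitively so "Home" and "home" count as duplicates.
    counts = Counter(t.strip().lower() for t in pages.values() if t.strip())
    report = {"missing": [], "duplicate": [], "too_long": []}
    for url, title in pages.items():
        clean = title.strip()
        if not clean:
            report["missing"].append(url)
            continue
        if counts[clean.lower()] > 1:
            report["duplicate"].append(url)
        if len(clean) > MAX_TITLE_CHARS:
            report["too_long"].append(url)
    return report


# Placeholder data standing in for an exported list of URLs and titles.
pages = {
    "/": "Home | Example",
    "/about": "Home | Example",
    "/contact": "",
    "/blog": "x" * 61,
}
print(audit_titles(pages))
```

The same structure works for meta descriptions and H1s by swapping in the relevant column and length limit.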

By analysing the data here, you can evaluate keyword relevance and consistency across these elements, ensuring they align with your SEO goals. As part of my audits, I like to use filters and export functionalities to help identify the largest opportunities and address the biggest SEO gaps efficiently.

Uncovering Thin Content: Identifying and Resolving Content Quality Issues

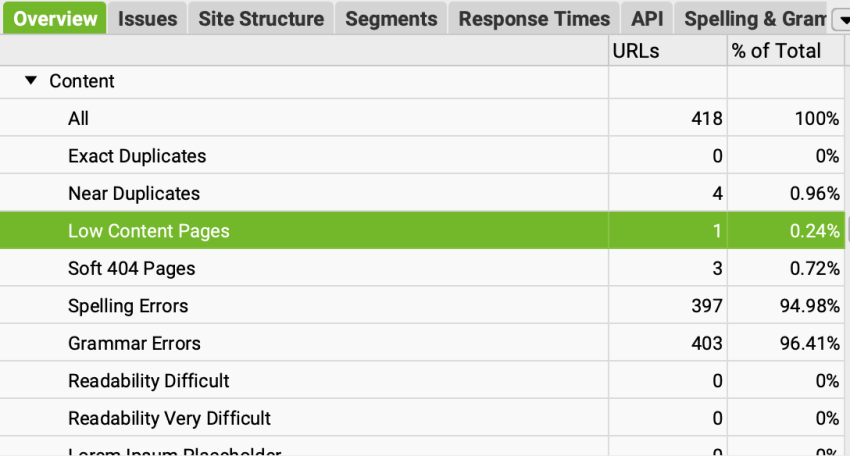

Thin content can negatively impact your website’s search visibility and user experience. Fortunately, Screaming Frog offers valuable insights to identify and resolve content quality issues efficiently.

The Content tab provides a thorough overview of your website’s content pages, including their word count, and has a section for Low Content Pages where you can easily see pages that can be expanded with content.

Screaming Frog allows you to sort pages based on word count, making it easy to pinpoint those with insufficient content. Once you have identified these pages, you can use this as a starting point for your content improvement strategy. Depending on the specific needs of each page, you can consider expanding the content by providing more in-depth information, adding relevant keywords, or improving the overall quality of the content.

In some cases, you may find similar or closely related pages with thin content. Consolidating these pages into a single, more comprehensive piece can improve the overall user experience and consolidate SEO signals, reducing the presence of thin content across your website.

By leveraging the insights provided by Screaming Frog, you can prioritise and address thin content issues. Improving the quality and depth of your website’s content helps boost your search engine rankings and enhances user engagement and satisfaction.

Investigating Duplicate Content: Finding and Resolving Issues with Screaming Frog

Duplicate content can harm your website’s search rankings and make it more difficult for search engines to determine the most relevant page they should display in SERPs for a given query.

Fortunately, Screaming Frog offers robust features to help you successfully identify and address duplicate content. Within the same Content tab from earlier, you can see instances where Exact or Near Duplicate information exists.

Take the time to sort and filter these elements, and make sure you analyse the page content itself. Look for sections or blocks of text that are replicated across multiple pages, and determine for yourself whether they would genuinely pose a problem before making any significant changes.

Depending on the nature of the duplicates, you can take a few approaches. For identical content on different URLs, implementing proper canonical tags or 301 redirects can help consolidate ranking signals and ensure search engines understand the preferred version of the content. In cases where similar content exists with minor variations, consider rewriting or reorganising the text to provide unique value to each page.

Identifying Broken Links and Redirects: Ensuring Smooth User Experience

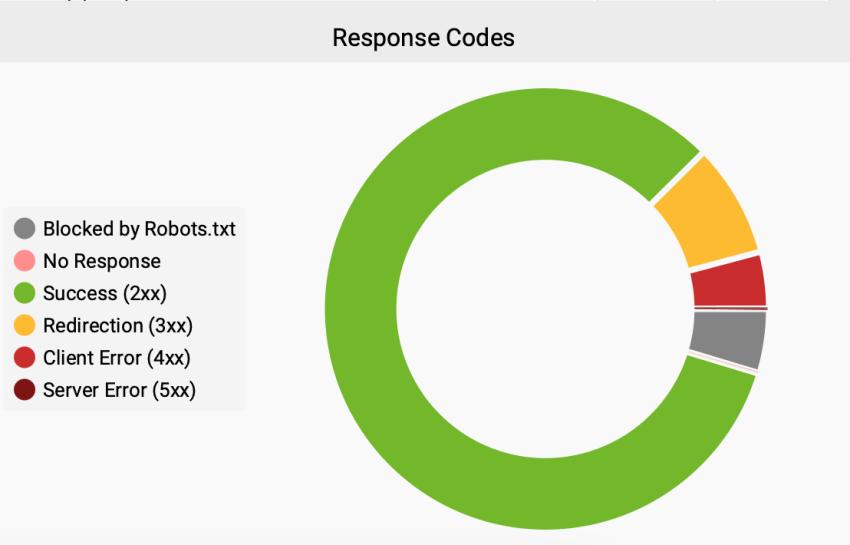

Broken links and redirects disrupt the user experience and harm your website’s SEO performance. The Response Codes tab offers powerful capabilities to help you correctly identify and resolve these issues.

Along with viewing affected URLs, there is a useful pie chart that shows the proportion of URLs returning each response code.

When it comes to broken links or 4xx errors, one common approach is to update the broken link so that it points directly to the correct, working URL.

While here, we can also identify URLs that return 3xx redirect codes. The Screaming Frog SEO Spider provides details about the target URLs, allowing you to assess the effectiveness and accuracy of the redirects. If any redirects are misconfigured (perhaps they use a 302 when a 301 would be better suited) or point to outdated or irrelevant content, you can update the internal links accordingly to ensure users are sent to the most relevant pages without the need to pass through redirects.

Resolving these issues helps maintain the integrity of your website’s link structure and improves search engine crawlability, positively impacting your organic performance.

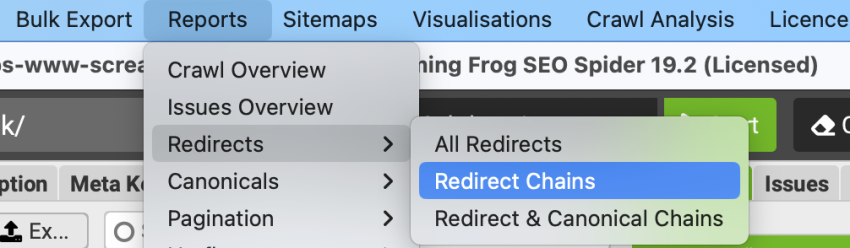

Unravelling Redirect Chains

While it is possible to find internal redirect chains within the Response Codes tab, I prefer to navigate to Reports > Redirects > Redirect Chains, to generate an exported spreadsheet report of all affected URLs.

Resolving redirect chains reduces the number of hops that users and search engines have to travel through to reach the final URL. It improves crawlability and results in a better UX.
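To make the idea of hops concrete, here is a small sketch that follows a chain through a mapping of redirecting URLs to their targets, such as one you might build from an exported report. The function name, the mapping and the hop limit are all hypothetical.

```python
def follow_redirects(
    start: str, redirects: dict[str, str], max_hops: int = 10
) -> tuple[list[str], bool]:
    """Follow a URL through a redirect mapping.

    Returns the chain of URLs visited and whether a loop was detected.
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects and len(chain) - 1 < max_hops:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, True  # we are back at a URL already visited
        seen.add(current)
    return chain, False


# Placeholder mapping: source URL -> redirect target.
redirects = {"/old": "/interim", "/interim": "/new"}

chain, is_loop = follow_redirects("/old", redirects)
print(chain, "hops:", len(chain) - 1, "loop:", is_loop)
```

Any chain with more than one hop is a candidate for fixing: point the first URL, and any internal links to it, straight at the final destination.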

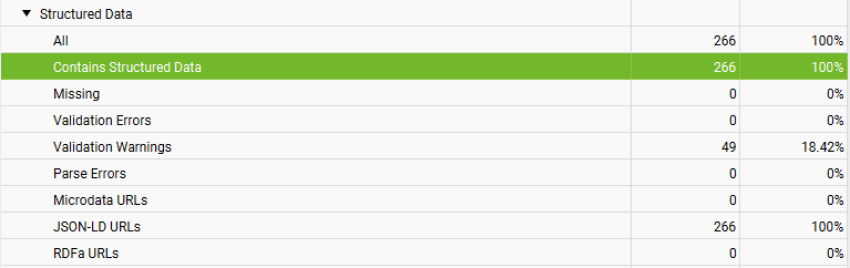

Evaluating Schema Markup: Enhancing Search Visibility

Schema markup, or structured data, is a powerful language that helps search engines understand and interpret your website’s content more effectively. To ensure optimal search visibility and improve the appearance of your website in search engine results pages, it is crucial to evaluate and optimise your schema markup.

You can easily identify pages that lack schema markup or have incorrectly implemented schema markup from within the Structured Data tab. Screaming Frog allows you to filter and sort pages based on schema markup, enabling you to focus on areas that require attention.

The detailed reports provide a granular view of each page’s schema markup, allowing you to pinpoint the specific elements or properties that need adjustment or correction.

By referring to the official schema.org documentation or using a validator such as Google’s Rich Results Test or the Schema Markup Validator (which replaced Google’s Structured Data Testing Tool), you can ensure that your schema markup adheres to the recommended guidelines and aligns with the intended content on each page.
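As a rough illustration of what a property check involves, the sketch below parses a JSON-LD snippet and reports which properties from a checklist are absent. The checklist is my own hypothetical example, not an official list of required properties, so build yours from the documentation for the types you use.

```python
import json

# Hypothetical checklist for illustration only; take the real requirements
# from the documentation for each schema type you rely on.
EXPECTED_PROPERTIES = {
    "Article": {"headline", "author", "datePublished"},
}


def missing_properties(json_ld: str) -> dict[str, list[str]]:
    """Return the checklist properties that each JSON-LD item lacks."""
    data = json.loads(json_ld)
    items = data if isinstance(data, list) else [data]
    report = {}
    for item in items:
        if not isinstance(item, dict):
            continue
        item_type = item.get("@type")
        # Skip items whose type is missing, a list, or not on the checklist.
        if not isinstance(item_type, str) or item_type not in EXPECTED_PROPERTIES:
            continue
        missing = sorted(EXPECTED_PROPERTIES[item_type] - set(item))
        if missing:
            report[item_type] = missing
    return report


snippet = """
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "How to run an SEO audit"
}
"""
print(missing_properties(snippet))
```

A check like this only confirms that properties are present. It does not replace a proper validator, which also checks value types and nesting.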

By resolving schema markup issues, you enhance the visibility of your website in search results and increase the likelihood of rich snippets and other enhanced search features. This, in turn, can lead to higher click-through rates and improved organic traffic.

Examining URL Structure and Hierarchy: Key Considerations in Screaming Frog

Your website’s URL structure and hierarchy are essential elements that impact user experience and search engine optimisation. With Screaming Frog’s comprehensive features, you can easily examine your website’s URL structure and hierarchy to identify potential issues and make necessary improvements.

By running a crawl analysis, you gain valuable insights into the URLs of your website’s pages. You can review the structure and hierarchy of the URLs to ensure they are logical, user-friendly, and optimised for search engines. The spider allows you to analyse URL depth, length, and format.

During the analysis, you can identify URLs that contain unnecessary parameters, repetitive paths, or long and complex structures. These issues can hinder the readability and crawlability of your website by search engines.
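The checks described above can be sketched with Python’s standard library. The function name and the example URL are placeholders, and note that "depth" here means the number of folders in the path, which is not the same thing as how many clicks a page is from the start of the crawl.

```python
from urllib.parse import parse_qsl, urlsplit


def url_metrics(url: str) -> dict:
    """Summarise the length, folder depth, parameters and repeated paths of a URL."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    return {
        "length": len(url),
        # Folder depth: the number of non-empty path segments.
        "depth": len(segments),
        "parameters": [key for key, _ in parse_qsl(parts.query, keep_blank_values=True)],
        "repeated_segments": sorted({s for s in segments if segments.count(s) > 1}),
    }


# Placeholder URL for illustration.
url = "https://www.example.com/shop/shoes/shoes/?colour=red&sort=price"
print(url_metrics(url))
```

Running this over an exported list of URLs and sorting by length or depth is a quick way to surface the longest and most deeply nested addresses first.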

If needed, consider implementing URL rewriting techniques, using descriptive and keyword-rich slugs, removing unnecessary parameters, and organising URLs into logical categories. Doing so creates cleaner, more intuitive URLs that are easier for users to navigate and understand. Additionally, search engines can better interpret and index your content, leading to improved organic rankings.

Reviewing Image Optimisation: Unveiling Opportunities for SEO Improvement

Optimising the images on your website is crucial for improving user engagement, and unoptimised images are one of the most common issues I encounter when looking into site speed. With Screaming Frog’s powerful capabilities, you can review and address image issues, enhancing your website’s SEO performance.

The Images tab lets you access insights such as image URLs, alt text, file sizes, and dimensions. This information allows you to identify image optimisation opportunities.

It is possible to use the PageSpeed Insights API within Screaming Frog to analyse page speed and Core Web Vitals in more detail, but for this article, we will focus on images.

Oversized images contribute to slow page load times. Screaming Frog provides the file sizes of each image and classifies any image over 100KB as large, helping you identify unnecessarily large images. You can significantly improve your website’s loading speed by compressing and resizing these images to an appropriate size without compromising quality.

Another element to consider is the presence of missing alt text for images. Alt text provides a textual description of an image, aiding accessibility and assisting search engines in understanding the image’s context. Screaming Frog helps you identify images without alt text, allowing you to add relevant and descriptive alt text to enhance accessibility and SEO.
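If you want to spot-check a single page’s HTML outside the crawler, the sketch below uses Python’s built-in HTML parser to list images with no alt attribute and images whose alt attribute is empty. The class name and sample markup are my own. An empty alt can be intentional for purely decorative images, so the two cases are kept separate.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect image sources that have no alt attribute or an empty one."""

    def __init__(self):
        super().__init__()
        self.missing_attribute = []
        self.empty_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        if "alt" not in attributes:
            self.missing_attribute.append(src)
        elif not (attributes["alt"] or "").strip():
            self.empty_alt.append(src)


# Placeholder markup for illustration.
html = (
    '<img src="/logo.png" alt="Company logo">'
    '<img src="/banner.png">'
    '<img src="/spacer.png" alt="">'
)

finder = MissingAltFinder()
finder.feed(html)
print("No alt attribute:", finder.missing_attribute)
print("Empty alt text:", finder.empty_alt)
```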

Additionally, Screaming Frog enables you to identify broken image links. I find that by reviewing the image URLs and associated response codes, you can quickly pinpoint missing images or incorrect file paths. Replacing broken image links with the correct URLs ensures that your website’s visuals are displayed correctly and contributes to a better user experience.

Assessing XML Sitemaps: Ensuring Proper Indexation

XML sitemaps play a vital role in helping search engines crawl and index your website’s pages effectively. It is essential to assess and optimise your XML sitemaps to ensure proper indexation and visibility in search engine results.

We talked earlier about adding the XML sitemap to the Screaming Frog configuration; now we will look at how to assess it. By conducting a crawl analysis, you gain insights into the completeness and accuracy of the XML sitemap(s) to ensure all relevant pages are included.

You can identify any missing or incorrect URLs in the XML sitemap(s) during the analysis. Screaming Frog provides a clear overview of the URLs that are not present or differ from the actual site structure. By identifying and resolving these issues, you ensure that search engines can discover and index all your important pages accurately.
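The comparison behind these checks is essentially a set difference, which the sketch below illustrates with Python’s standard XML parser. The function names, sample sitemap and URL list are placeholders; in practice the second set would come from the indexable URLs in your crawl export.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_locs(xml_text: str) -> set[str]:
    """Extract every <loc> URL from a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def compare(sitemap_urls: set[str], indexable_urls: set[str]) -> dict[str, list[str]]:
    """Show which URLs appear on only one side of the comparison."""
    return {
        "in_sitemap_only": sorted(sitemap_urls - indexable_urls),
        "missing_from_sitemap": sorted(indexable_urls - sitemap_urls),
    }


# Placeholder sitemap and crawl data for illustration.
sitemap_xml = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://www.example.com/</loc></url>"
    "<url><loc>https://www.example.com/old-page/</loc></url>"
    "</urlset>"
)
indexable = {"https://www.example.com/", "https://www.example.com/new-page/"}

print(compare(sitemap_locs(sitemap_xml), indexable))
```

URLs that appear only in the sitemap are worth checking for redirects, errors or noindex directives, while indexable URLs missing from the sitemap are candidates to add.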

Once you have identified XML sitemap issues, the next steps may involve the following:

- Updating the XML sitemap(s) to include missing or corrected URLs.

- Ensuring accurate metadata.

- Maintaining a well-structured hierarchy.

By leveraging Screaming Frog’s XML sitemap analysis capabilities, you can ensure proper indexation of your website’s pages and enhance its visibility in search engine results.

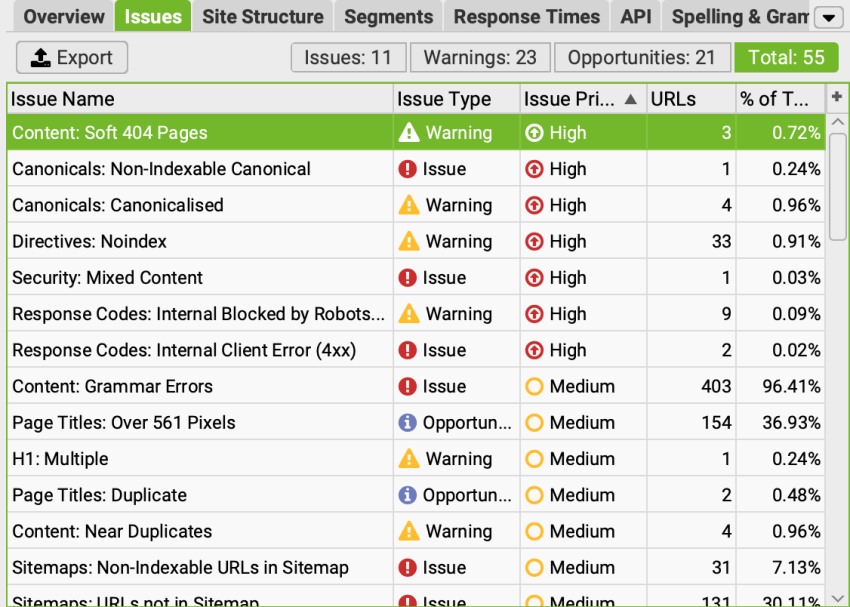

Unearthing Actionable Insights with Screaming Frog Issues

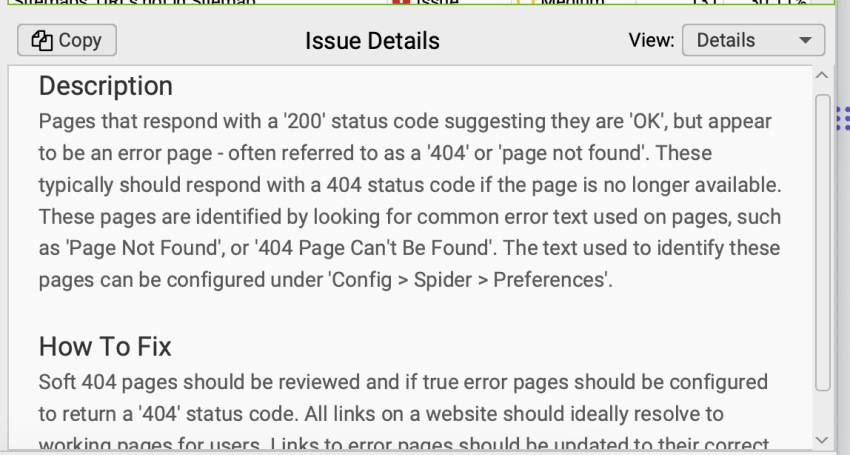

Since the release of Screaming Frog SEO Spider version 17.0, we have the Issues tab, which provides details of potential issues, warnings and opportunities discovered in the crawl.

The data included here has the issue type describing whether it’s likely an ‘Issue’, an ‘Opportunity’ or a ‘Warning’. We also get an Issue Priority showing a ‘High’, ‘Medium’ or ‘Low’ priority based upon potential impact and those that may require more attention. It is also useful to see the number of URLs affected by the issue.

If you are unsure about the meaning of the issue and how to fix it, the SEO Spider also includes in-app descriptions and details.

The Issues tab is a really useful addition to the crawler and helps us further understand each of the findings. That being said, it’s important to consider the overall context of the issue and not to regard one item as the number 1 issue on the website just because the tool says so. Other factors may be at play that have not yet been tested and could contribute to performance problems.

I enjoy this Issues tab and find it useful in determining what’s achievable and fixable within the current roadmap.

Harnessing the Potential of Screaming Frog

Screaming Frog is an invaluable tool for conducting comprehensive website audits and optimising your website for SEO performance. It is a tool I use on every website in some capacity. It enables you to assess critical areas such as crawl configuration, on-page metadata, thin content, duplicate content, broken links, redirects, URL structure, image optimisation, XML sitemaps, and more.

With its intuitive interface and comprehensive reports, Screaming Frog empowers you to dive deep into your website’s data, uncover hidden opportunities for improvement, and take proactive steps to enhance your website’s overall performance.
